Xinrong Hu, Chen Yang, Jin Huang, Lei Zhu, Ping Li, Bin Sheng, Tong-Yee Lee, Senior Member, IEEE

0 Abstract

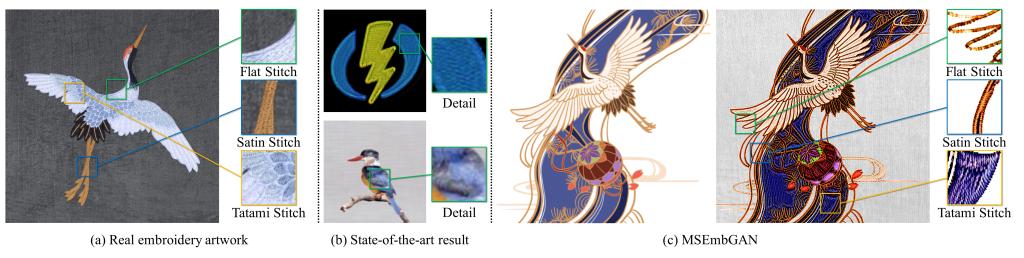

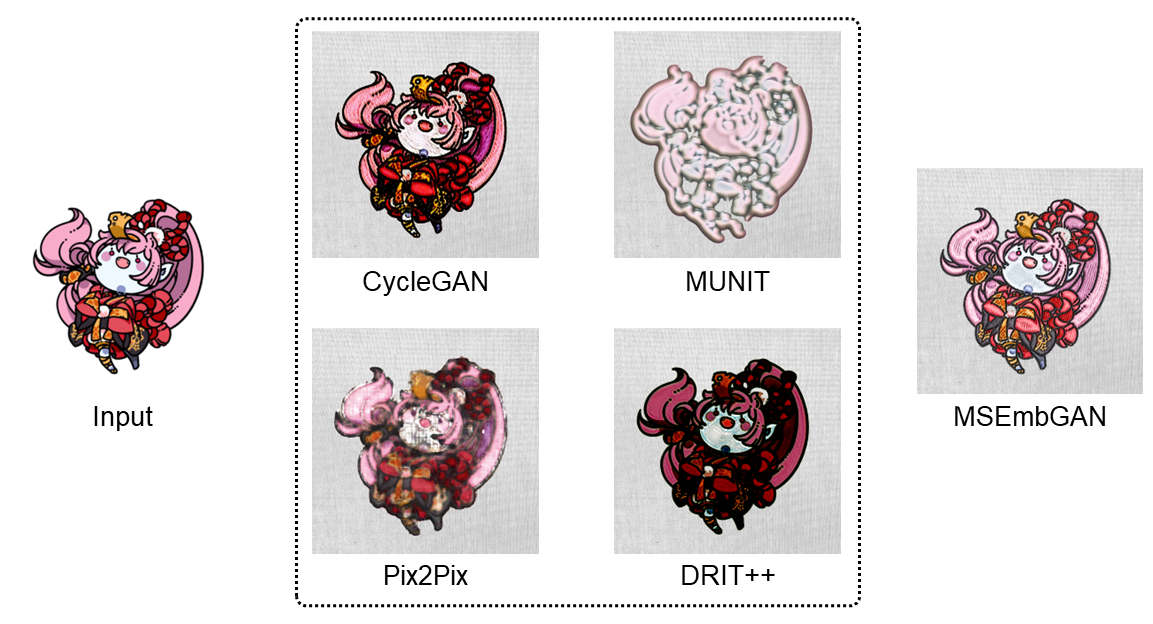

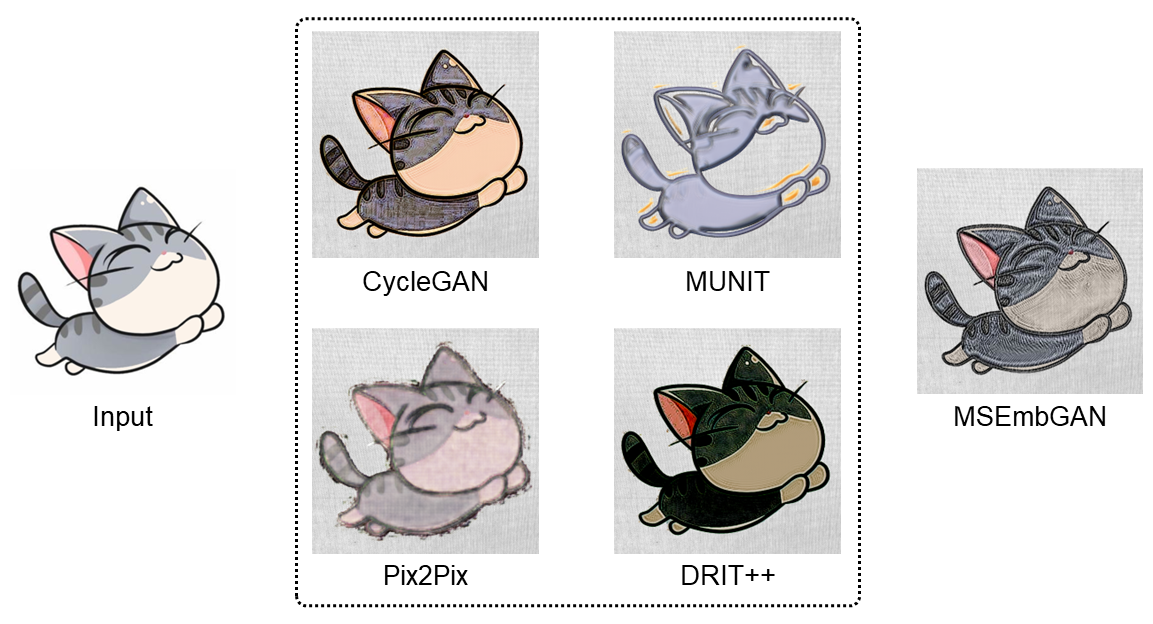

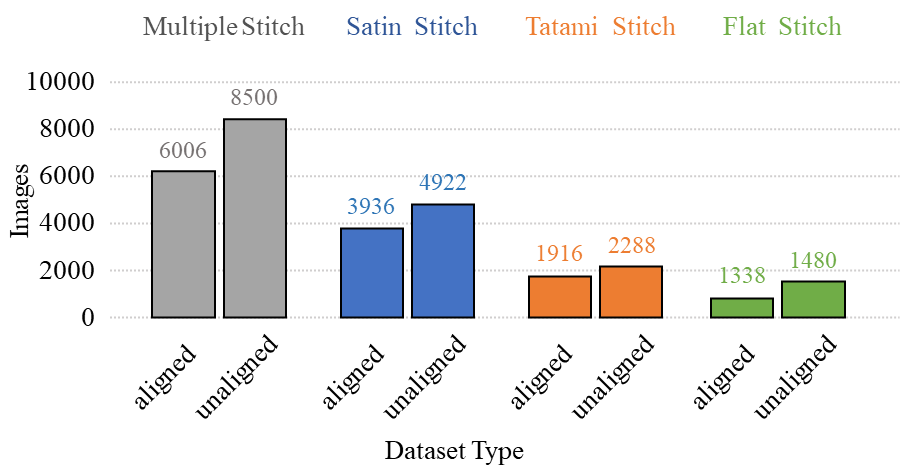

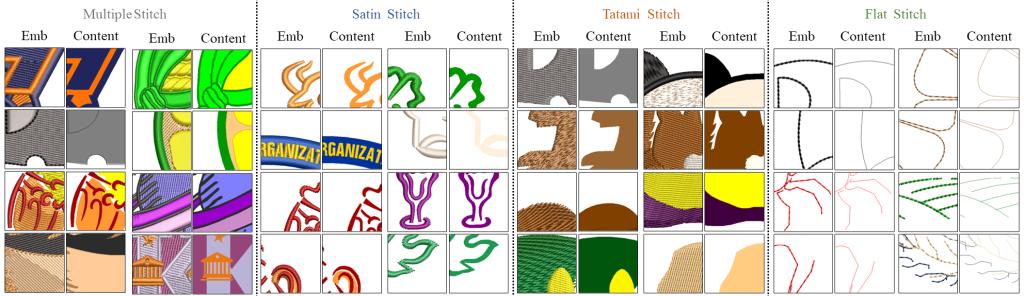

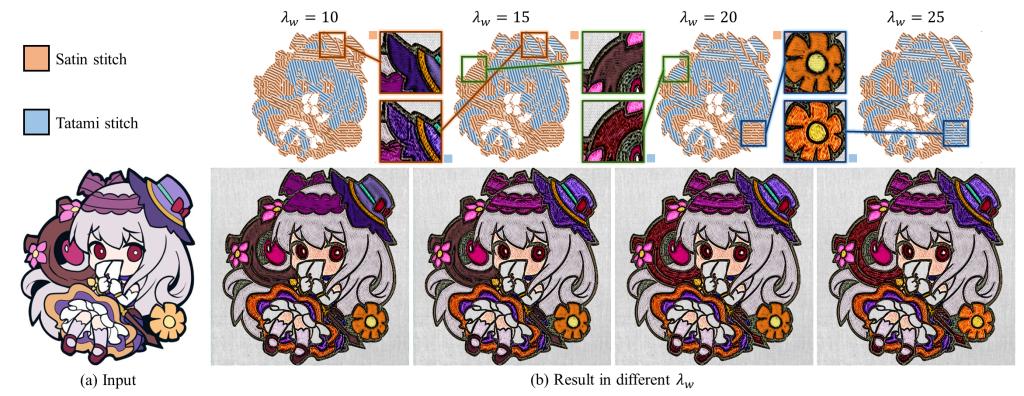

Although convolutional neural networks (CNNs) have been widely used in embroidery synthesis, existing methods tend to fail at predicting the diverse stitch types that real embroidery images often contain. The underlying reasons are that these network architectures have no independent module for capturing the stitch features of an embroidery image, and that the field lacks a multi-stitch embroidery dataset labeled with stitch types. In this paper, we propose a multi-stitch embroidery generative adversarial network (MSEmbGAN) that accounts for the influence of stitch types on embroidery image synthesis. To the best of our knowledge, our work is the first CNN-based method for synthesizing multi-stitch embroidery images. To achieve this, we devise a region-aware texture generation network, which detects multiple regions of the input image using a stitch classifier and generates a stitch texture for each region based on its shape features. In addition, a structure colorization network with a structure discriminator is developed to further optimize the whole image by maintaining structural consistency between the input image and the output multi-stitch embroidery image. We also present a multi-stitch embroidery dataset labeled with multiple stitch types (three single-stitch types and one multi-stitch type). To the best of our knowledge, it is also the largest embroidery dataset to date, with more than 30K high-quality multi-stitch embroidery images (over 13K aligned content-embroidery images and over 17K unaligned images). Experimental results, including a user study, show that MSEmbGAN clearly outperforms both the current state-of-the-art traditional embroidery synthesis method and popular state-of-the-art style transfer methods.

1 Introduction Video

2 Comparison

2.1 Related works comparison

2.2 Quantitative evaluation

| Methods | LPIPS | FID |
| --- | --- | --- |
| CycleGAN | 0.296 | 153.49 |
| Pix2Pix | 0.432 | 239.89 |
| MUNIT | 0.332 | 146.14 |
| DRIT++ | 0.305 | 171.15 |
| MSEmbGAN | 0.262 | 122.44 |
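
To make the size of the gap in the table easier to read, the following is a minimal sketch that finds the strongest baseline for each metric and computes how much lower the MSEmbGAN score is in relative terms. The numbers are copied from the table; the helper names `best_baseline` and `relative_reduction` are illustrative and not part of the authors' code. It relies on the standard convention that lower LPIPS and lower FID are better.

```python
# Scores copied from the quantitative evaluation table.
# Assumption: lower is better for both LPIPS and FID (the usual convention).
scores = {
    "CycleGAN": {"LPIPS": 0.296, "FID": 153.49},
    "Pix2Pix": {"LPIPS": 0.432, "FID": 239.89},
    "MUNIT": {"LPIPS": 0.332, "FID": 146.14},
    "DRIT++": {"LPIPS": 0.305, "FID": 171.15},
    "MSEmbGAN": {"LPIPS": 0.262, "FID": 122.44},
}


def best_baseline(metric, ours="MSEmbGAN"):
    """Return (name, value) of the lowest-scoring method other than `ours`."""
    baselines = {m: s[metric] for m, s in scores.items() if m != ours}
    name = min(baselines, key=baselines.get)
    return name, baselines[name]


def relative_reduction(metric, ours="MSEmbGAN"):
    """Fractional reduction of `ours` relative to the best baseline."""
    _, base = best_baseline(metric, ours)
    return (base - scores[ours][metric]) / base


for metric in ("LPIPS", "FID"):
    name, value = best_baseline(metric)
    print(f"{metric}: best baseline {name} ({value}), "
          f"MSEmbGAN lower by {relative_reduction(metric):.1%}")
```

With the table values, the strongest baseline is CycleGAN for LPIPS and MUNIT for FID, and the MSEmbGAN scores are roughly 11.5% and 16.2% lower, respectively.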

2.3 User study

| Methods | Embroidery quality (mean / std) | Structure quality (mean / std) | Image quality (mean / std) |
| --- | --- | --- | --- |
| CycleGAN | 2.670 / 0.848 | 2.659 / 0.905 | 2.739 / 0.865 |
| Pix2Pix | 2.696 / 0.810 | 2.937 / 0.750 | 2.438 / 0.878 |
| MUNIT | 2.367 / 0.819 | 2.751 / 0.812 | 2.731 / 0.822 |
| DRIT++ | 2.822 / 0.785 | 2.347 / 0.847 | 2.865 / 0.763 |
| MSEmbGAN | 3.834 / 0.721 | 3.734 / 0.702 | 3.711 / 0.714 |

3 Dataset

4 Stitch Distributions

- Attachment: Dataset.zip